mechman

AV Addict

- Preamp, Processor or Receiver: Pioneer VSX-832
- Streaming Subscriptions: HBO Max, YouTubeTV, Hulu, Netflix, Disney+
- Front Speakers: Definitive Technology Studio Monitor 55s
- Center Channel Speaker: Definitive Technology CS8040
- Surround Back Speakers: Definitive Technology DI6.5R
- Other Speakers: Apple TV 4K
- Video Display Device: LG OLED65C7P
- Remote Control: Logitech Harmony 650

HDR, or High Dynamic Range, arrived shortly after HDTVs became common in most people’s living rooms and home theaters. It replaces the Standard Dynamic Range (SDR) video that nearly everyone is watching today. HDR adds depth, giving what many people describe as an almost 3D look to the image, and it more closely matches what we see in the real world. The improvements show up in shadow detail and highlight detail across the whole color gamut. Everyone talks about 4K, or Ultra High Definition TV (UHDTV), as one of the recent improvements to displays. While that’s true in a sense, the benefits of 4K are much smaller when it isn’t paired with HDR.

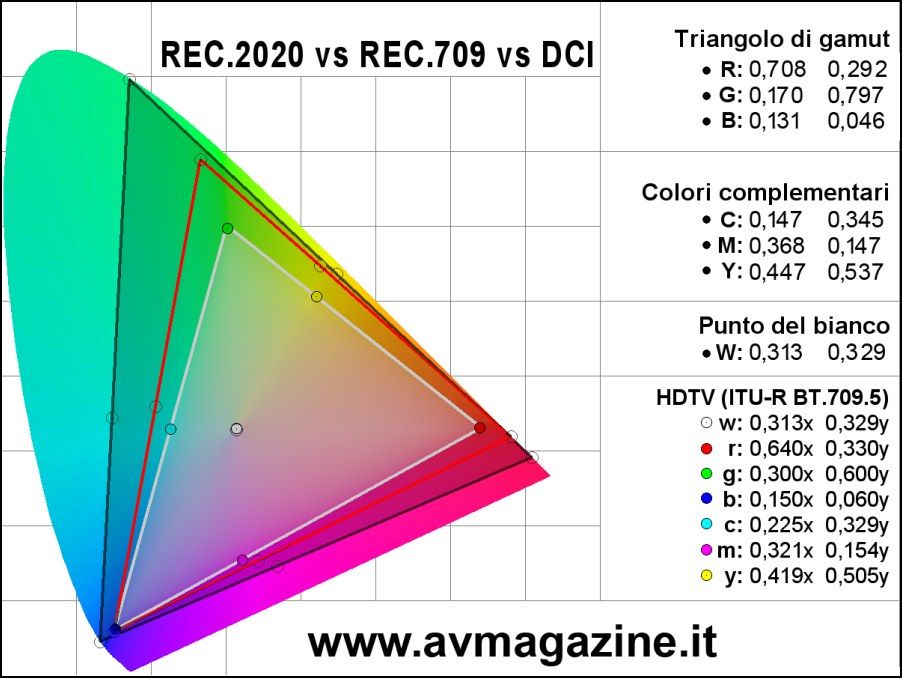

Until recently, displays have all been based on the Rec709 (BT.709) and Rec601 (BT.601) color spaces. These color spaces contain only a fraction of the visible colors (chromaticities). The newer color spaces used for HDR, DCI-P3 and Rec2020 (BT.2020), cover a much larger share of the visible colors, roughly half or more. Here's an image I found in an Italian magazine that depicts the gamuts nicely:

The outer green triangle is Rec2020 (Dolby Vision), the red triangle inside it is DCI-P3 (HDR10), and the innermost white triangle is Rec709, the current standard. As of this writing, no displays can reproduce the full Rec2020 gamut. Rec2020 displays are slated to roll out in 2020.
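To put rough numbers on the triangles described above, here is a small Python sketch that compares their areas in the CIE 1931 xy diagram, using each standard's published red, green and blue primaries. Area in xy is only a crude proxy (the diagram isn't perceptually uniform), and the helper names are illustrative, not from any library.

```python
# Illustrative helper: compare gamut triangle sizes in the CIE 1931 xy diagram.
# Each entry lists the (x, y) chromaticities of the red, green and blue primaries.
GAMUTS = {
    "Rec709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "DCI-P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "Rec2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}


def triangle_area(points):
    """Shoelace formula for the area of a triangle given three (x, y) points."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


def relative_sizes(reference="Rec709"):
    """Area of each gamut triangle divided by the reference gamut's area."""
    ref = triangle_area(GAMUTS[reference])
    return {name: triangle_area(pts) / ref for name, pts in GAMUTS.items()}


if __name__ == "__main__":
    for name, ratio in relative_sizes().items():
        print(f"{name}: {ratio:.2f}x the area of Rec709")
```

Run as-is, it should report about 1.36x for DCI-P3 and 1.89x for Rec2020, so by this rough measure Rec2020 is around 40% larger than DCI-P3.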

Contrast ratio is another area that improves with HDR. HDR-badged sets must be able to produce the range of brightness and darkness required by the particular standards they support. Ideally, that range would run from 10,000 nits down to 0 nits. Currently, though, LED/LCD displays produce a range of about 1,100 nits to 0.05 nits, while OLED displays produce 540 nits to 0.0005 nits. Needless to say, that's quite an improvement in contrast ratio over older displays.
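Contrast ratio is simply peak brightness divided by black level. Here is a minimal Python sketch using the figures quoted above (they are the numbers from this post, not new measurements). A true 0-nit black makes the ratio unbounded, which is why the ideal case is reported as infinite.

```python
def contrast_ratio(peak_nits, black_nits):
    """Peak brightness divided by black level; a 0-nit black is unbounded."""
    if black_nits <= 0:
        return float("inf")
    return peak_nits / black_nits


# (peak nits, black nits) as quoted in the text above
DISPLAYS = {
    "LED/LCD": (1100, 0.05),
    "OLED": (540, 0.0005),
    "Ideal": (10_000, 0),
}

if __name__ == "__main__":
    for name, (peak, black) in DISPLAYS.items():
        print(f"{name}: {contrast_ratio(peak, black):,.0f}:1")
```

With those numbers the LED/LCD case works out to about 22,000:1 and the OLED case to about 1,080,000:1, which is why OLED's lower peak brightness still yields the larger ratio.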

So what you will see going forward is greater dynamic range and much improved color depth.

There are currently five different standards for HDR floating around.

Dolby Vision

If you read Todd’s review of the 2017 TV Shootout, you saw where he mentioned that Dolby Cinema content is mastered on the big screen. Normally, content is mastered in a studio on a monitor such as the Sony BVM-X300 that was on display at the shootout. Dolby Vision uses the Rec2020 color standard, which is larger than the DCI-P3 gamut used by HDR10. It is a proprietary format, mastered to Dolby’s higher standards at a higher cost. While I have been out of the loop for a few years, it’s my understanding that there isn’t a lot of Dolby Vision content available at this time outside of some Netflix and VUDU titles. Not all manufacturers have incorporated it into their models yet; I believe it’s currently limited to LG OLEDs and some current Vizio models. There are a few Dolby Vision discs on the market right now, including Despicable Me and Despicable Me 2.

Dolby Vision allows up to 12-bit color, or 68.7 billion colors. Bit depth is basically color depth: how finely each color can be graded. With 12-bit color, each pixel has 12 bits of data per color channel, which gives 2^12, or 4,096, variations of each color, and 4,096 × 4,096 × 4,096 ≈ 68.7 billion colors in total.
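The arithmetic behind those bit-depth numbers, as a short Python sketch: each channel gets 2^bits levels, and the total palette is that number cubed for red, green and blue. The 8-bit row is included only as a comparison point for typical SDR video.

```python
def levels_per_channel(bits):
    """Distinct code values per color channel: 2 ** bits."""
    return 2 ** bits


def total_colors(bits, channels=3):
    """Every combination of R, G and B levels."""
    return levels_per_channel(bits) ** channels


if __name__ == "__main__":
    for bits in (8, 10, 12):
        print(f"{bits}-bit: {levels_per_channel(bits):,} levels per channel, "
              f"{total_colors(bits):,} colors")
```

For 12-bit this gives 4,096 levels per channel and 68,719,476,736 (about 68.7 billion) colors; for 10-bit, 1,024 levels and about 1.07 billion colors.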

HDR10

HDR10 is an open standard that any manufacturer can implement in its displays at no cost. It is also the most widespread. All HDR Blu-ray discs currently carry HDR10, even the Dolby Vision titles, which use it as a base layer. There is also HDR10 content on Netflix and Amazon Video. HDR10 uses the smaller DCI-P3 gamut at 10-bit color depth; 10-bit color gives 2^10 = 1,024 variations of each color. HDR10 uses static metadata that is set at the start of the content and doesn't change throughout.
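To illustrate what "static" means in practice, here is a deliberately simplified Python sketch. It assumes a single hypothetical brightness value for the whole title (real HDR10 metadata carries more fields) and a made-up roll-off curve (an extended Reinhard curve, not what any particular TV uses) for fitting bright content onto a dimmer display.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StaticMetadata:
    """Simplified stand-in for HDR10 static metadata: one value for the whole title."""
    max_content_nits: float  # brightest pixel anywhere in the title


def tone_map(nits, content_max, display_peak):
    """Hypothetical roll-off curve (extended Reinhard) that lands content_max
    exactly on display_peak. Content that already fits passes through unchanged."""
    if content_max <= display_peak:
        return nits
    x = nits / display_peak
    w = content_max / display_peak
    return display_peak * x * (1 + x / (w * w)) / (1 + x)


def render_title(scene_peaks, metadata, display_peak):
    """With static metadata, every scene goes through the same curve."""
    return [tone_map(p, metadata.max_content_nits, display_peak) for p in scene_peaks]


if __name__ == "__main__":
    meta = StaticMetadata(max_content_nits=4000)  # hypothetical mastering peak
    # Peak brightness of a daylight scene and a night scene, on a 540-nit OLED.
    print(render_title([4000, 200], meta, display_peak=540))
```

In this sketch the 200-nit night highlight comes out at roughly 147 nits even though the display could show it as-is, because the one curve was chosen for the brightest moment in the title.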

HDR10+

HDR10+ was announced this past spring by Samsung and Amazon. It improves on HDR10 by adding dynamic metadata encoded within the content, to compete more closely with Dolby Vision. This lets the display adjust brightness and darkness on a scene-by-scene or even frame-by-frame basis. Amazon plans to introduce HDR10+ content later this year.
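A companion sketch for dynamic metadata, under the same simplifying assumptions: a made-up extended Reinhard roll-off rather than any TV's actual tone mapping, and each scene's own maximum standing in for the per-scene metadata that HDR10+ content would carry. It renders the same two hypothetical scenes both ways.

```python
def tone_map(nits, content_max, display_peak):
    """Hypothetical extended-Reinhard roll-off: content_max lands on display_peak,
    and content that already fits the display passes through unchanged."""
    if content_max <= display_peak:
        return nits
    x = nits / display_peak
    w = content_max / display_peak
    return display_peak * x * (1 + x / (w * w)) / (1 + x)


def render(scenes, display_peak, dynamic):
    """scenes: list of scenes, each a list of pixel brightness values in nits.
    Static: one curve from the title-wide maximum.
    Dynamic: each scene gets a curve from its own maximum (a stand-in for
    per-scene metadata, which real content carries rather than computes)."""
    title_max = max(max(scene) for scene in scenes)
    rendered = []
    for scene in scenes:
        content_max = max(scene) if dynamic else title_max
        rendered.append([tone_map(p, content_max, display_peak) for p in scene])
    return rendered


if __name__ == "__main__":
    scenes = [[4000, 1000, 100], [200, 50, 5]]  # hypothetical daylight and night scenes
    print("static: ", render(scenes, 540, dynamic=False))
    print("dynamic:", render(scenes, 540, dynamic=True))
```

With static metadata the night scene is dimmed by a curve chosen for the daylight scene; with per-scene metadata it passes through untouched, while the daylight scene is handled identically either way.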

HLG

HLG stands for Hybrid Log-Gamma. It is being developed by NHK and the BBC as a format for live broadcast video. It is royalty free and backwards compatible with SDR displays, and YouTube supports it. HLG does not rely on metadata.
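The "hybrid" in the name refers to the shape of the transfer curve: a conventional gamma-like square-root segment for darker tones, which is largely why the signal stays watchable on SDR sets, and a logarithmic segment for highlights. Because the curve is fixed, no metadata is needed to interpret it. Here is a Python sketch of the HLG OETF using the constants published in ITU-R BT.2100, with scene light normalized to 0 to 1; the function name is my own.

```python
import math

# HLG constants as published in ITU-R BT.2100 (also ARIB STD-B67).
A = 0.17883277
B = 1 - 4 * A                   # about 0.28466892
C = 0.5 - A * math.log(4 * A)   # about 0.55991073


def hlg_oetf(e):
    """Map normalized scene light e in [0, 1] to an HLG signal value in [0, 1]."""
    if not 0.0 <= e <= 1.0:
        raise ValueError("e must be in [0, 1]")
    if e <= 1 / 12:
        return math.sqrt(3 * e)          # gamma-like square-root segment
    return A * math.log(12 * e - B) + C  # logarithmic segment for highlights


if __name__ == "__main__":
    for e in (0.0, 0.01, 1 / 12, 0.25, 0.5, 1.0):
        print(f"{e:.4f} -> {hlg_oetf(e):.4f}")
```

Both segments meet at a signal value of 0.5 when e = 1/12, and e = 1.0 maps to (very nearly) 1.0.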

Advanced HDR

Advanced HDR is being designed for broadcast TV and for upscaling SDR content to HDR. The intent is for this format to be cross-platform, meaning it should work with hardware built for the other standards and be easier to implement.

Whether or not you adopt HDR is a matter of personal preference. The main things to keep in mind are that Dolby Vision content will still play on an HDR set, and that neither HLG nor Advanced HDR will require you to buy new hardware; the formats are largely cross-compatible. What I have learned over the last week or so is that HDR is not implemented equally across sets, and that it can be finicky. Regardless, hopefully this will help you when decision time draws near.
