
FSAF (Fast subband adaptive filtering) measurement

- Thread starter John Mulcahy

John Mulcahy, REW Author (Thread Starter). Joined: Apr 3, 2017. Posts: 9,136

No.

I'm a fan of M-Noise as a general noise stimulus that resembles the spectrum of real audio; it's a lot better than pink noise, anyway. I think Stoneeh is trying to compare the result of FSAF to a multitone IMD type of measurement, so the magnitude spectrum and crest factor need to stay similar, or it's not a fair comparison.
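To see why both the magnitude spectrum and the crest factor have to be matched, here is a minimal sketch (plain Python, function names hypothetical, not anything REW exposes) that computes crest factor as peak over RMS in dB. Two multitone signals with exactly the same tones and amplitudes, and therefore the same magnitude spectrum, end up with different crest factors purely because of their phases.

```python
import math
import random


def crest_factor_db(samples):
    """Crest factor (peak / RMS) of a signal, in dB."""
    if not samples:
        raise ValueError("empty signal")
    peak = max(abs(s) for s in samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    if rms == 0.0:
        raise ValueError("crest factor is undefined for a silent signal")
    return 20.0 * math.log10(peak / rms)


def multitone(freqs_hz, phases_rad, fs, n):
    """Equal-amplitude sum of sines; use multiples of fs/n so every tone has whole cycles."""
    return [
        sum(math.sin(2.0 * math.pi * f * t / fs + p) for f, p in zip(freqs_hz, phases_rad))
        for t in range(n)
    ]


fs, n = 48000, 4800                       # 10 Hz bin spacing, 0.1 s of signal
sine = multitone([1000.0], [0.0], fs, n)
tones = [100.0 * k for k in range(1, 9)]  # 8 tones, 100 Hz to 800 Hz
aligned = multitone(tones, [math.pi / 2] * 8, fs, n)  # all tones peak together at t = 0

random.seed(1)
spread = multitone(tones, [random.uniform(0.0, 2.0 * math.pi) for _ in tones], fs, n)

print(round(crest_factor_db(sine), 2))     # 3.01
print(round(crest_factor_db(aligned), 2))  # 12.04
print(crest_factor_db(spread) < crest_factor_db(aligned))  # True: same spectrum, lower crest factor
```

The sine gives the familiar 3 dB; the phase-aligned 8-tone signal reaches 20·log10(4) ≈ 12 dB, and randomizing the phases lowers it while leaving the spectrum untouched.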

The only reason simplified tests such as sine sweeps and multitone IMD tests exist is their low computational load. Their interpretation in terms of music is debatable. Many self-proclaimed experts claim that by looking at FR, harmonics, and spinoramas they can predict how a loudspeaker will sound in a given room. Others call it snake oil.

FSAF comes from the opposite direction: it lets you separate distortions from the original, using the music you actually listen to. You are then free to devise simplified tests that are consistent with your own perception of the distortions that matter to you (because each person's hearing is unique), not the other way around.


It allows you to separate distortions from the original, on the music you listen to

What does "original" refer to here, please?

What level of explanation do you need? PhD or 5 years old?

The "original" does not refer to master tapes or to the acoustic field in the recording studio. It refers to the digital recordings available to the end user. It is not a problem to equalize your acoustic system to a picture-perfect, ruler-flat FR (say, at 1 m on axis, integrated over a sphere with an omnidirectional mic). The resulting acoustic signal is supposed to be a linear time-invariant (LTI) transformation of the digital recording, but it is not. The deviations from that LTI transformation are what FSAF treats as distortions. Does this explanation suffice?
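FSAF itself uses fast subband adaptive filtering, and the thread does not describe how REW implements it. To illustrate only the principle in the post above (fit the best LTI model from the digital original to the recording, and treat whatever is left over as distortion plus noise), the sketch below substitutes a plain fullband NLMS adaptive filter. The test signal, the "playback chain" `h`, the second-order distortion term and all function names are hypothetical.

```python
import math
import random


def fir(h, x):
    """Causal FIR filter: y[n] = sum_k h[k] * x[n - k], zero initial state."""
    return [sum(h[k] * x[n - k] for k in range(len(h)) if n - k >= 0) for n in range(len(x))]


def nlms_residual(x, d, taps=8, mu=0.5, eps=1e-12):
    """Adapt an FIR filter w so that (w * x) tracks d; return e[n] = d[n] - (w * x)[n].

    The residual is whatever a linear time-invariant model cannot explain.
    """
    w = [0.0] * taps
    buf = [0.0] * taps  # most recent input samples, newest first
    residual = []
    for xn, dn in zip(x, d):
        buf = [xn] + buf[:-1]
        e = dn - sum(wi * bi for wi, bi in zip(w, buf))
        step = mu * e / (eps + sum(b * b for b in buf))  # normalized LMS step
        w = [wi + step * bi for wi, bi in zip(w, buf)]
        residual.append(e)
    return residual


def rms(v):
    return math.sqrt(sum(s * s for s in v) / len(v))


random.seed(0)
x = [random.uniform(-1.0, 1.0) for _ in range(20000)]  # stand-in for the digital "original"
h = [0.5, 0.3, -0.2]                                    # hypothetical linear playback chain
linear = fir(h, x)
distortion = [0.1 * v * v for v in linear]              # hypothetical 2nd-order distortion

res_clean = nlms_residual(x, linear)                    # purely LTI "recording"
res_dist = nlms_residual(x, [a + b for a, b in zip(linear, distortion)], mu=0.1)

half = len(x) // 2
print(max(abs(e) for e in res_clean[half:]))          # tiny: the LTI part is fully explained
print(rms(res_dist[half:]) / rms(distortion[half:]))  # close to 1: residual ~ the distortion
```

With a purely linear chain the residual converges to essentially zero; with the added second-order term it settles at roughly the level of that term, because no linear filter can model it. Subband adaptive filtering is generally used to cut computation and speed up convergence on coloured inputs such as music; this sketch does not attempt that.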


I guess 5 years old is my level, since @dcibel made me understand lol

thanks to both

Now this was very useful, thanks.


"misuse and abuse" is exactly what I am looking for.

I think I could be this guy.

Can impulses be used as a source? My first idea for a misuse would be to hear my room's reverberation in isolation.

John, if the FSAF measurement was done from a file, what would the TD+N distortion graph show, the sum of all distortions plus noise? If the signal received by the microphone differs from the file, how can I highlight this difference on the graph? Maybe the TD+N graph is the difference graph?

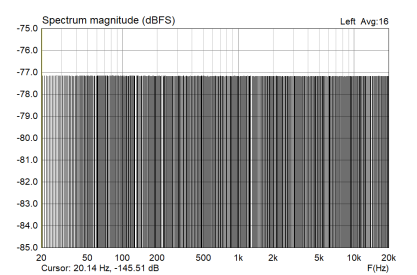

The distortion graph for FSAF is a spectrum of the residual, so it's total distortion + noise for the duration of the audio, regardless of what the input audio is. For more detailed analysis, listen to the residual audio and/or use "load FSAF residual", then view it in the spectrogram.
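The idea that the distortion graph is just the spectrum of the residual can be sketched as below. How REW actually windows, averages, smooths and references that spectrum is not stated in the thread, so the Hann window, the frame averaging, the naive DFT and the single TD+N figure (residual energy relative to recorded energy) are all assumptions, and the function names are hypothetical. The example residual is a made-up second harmonic that an LTI fit could not explain.

```python
import cmath
import math


def tdn_db(residual, recorded):
    """Residual energy relative to recorded energy, in dB (one possible TD+N figure)."""
    return 10.0 * math.log10(sum(r * r for r in residual) / sum(s * s for s in recorded))


def averaged_power_spectrum(signal, frame=256):
    """Hann-windowed, frame-averaged power spectrum, bins 0..frame/2 (naive DFT)."""
    n_frames = len(signal) // frame
    if n_frames == 0:
        raise ValueError("signal is shorter than one frame")
    window = [0.5 - 0.5 * math.cos(2.0 * math.pi * n / frame) for n in range(frame)]
    spectrum = [0.0] * (frame // 2 + 1)
    for f in range(n_frames):
        seg = [w * s for w, s in zip(window, signal[f * frame:(f + 1) * frame])]
        for k in range(len(spectrum)):
            bin_k = sum(v * cmath.exp(-2j * math.pi * k * n / frame) for n, v in enumerate(seg))
            spectrum[k] += abs(bin_k) ** 2 / n_frames
    return spectrum


frame = 64
# Hypothetical recording: a tone in bin 4 plus a 2nd harmonic (bin 8) 40 dB down.
recorded = [math.sin(2.0 * math.pi * 4 * n / frame) + 0.01 * math.sin(2.0 * math.pi * 8 * n / frame)
            for n in range(4 * frame)]
# Hypothetical residual: the part an LTI fit could not explain (the harmonic).
residual = [0.01 * math.sin(2.0 * math.pi * 8 * n / frame) for n in range(4 * frame)]

print(round(tdn_db(residual, recorded), 2))  # -40.0
spectrum = averaged_power_spectrum(residual, frame)
print(max(range(len(spectrum)), key=spectrum.__getitem__))  # 8, where the residual's energy sits
```

Because the spectrum is taken over the residual alone, it shows the same thing whatever the input audio was; the input only determines what ended up in the residual.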
